Published 2025-09-27.

Time to read: 3 minutes.


LM Studio can run as a standalone application or as a CLI, and it can act as an MCP client.

The standalone application is available for Windows, macOS, and Linux. It can run many local AI models from Hugging Face. LM Studio primarily works with models in GGUF, a format optimized for running large language models on local hardware using the CPU, the GPU, or both. LM Studio also supports MLX models on Apple Silicon, but GGUF remains the most widely supported model format.
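If you are unsure whether a downloaded file really is GGUF, the file header can be checked directly. The sketch below relies on the GGUF layout, in which a file starts with the four ASCII bytes `GGUF` followed by a little-endian 32-bit version number. The function name `read_gguf_version` is made up for this example and is not part of LM Studio.

```python
import struct

GGUF_MAGIC = b"GGUF"  # the first four bytes of a GGUF file


def read_gguf_version(path):
    """Return the GGUF format version, or None if the file is not GGUF."""
    with open(path, "rb") as f:
        header = f.read(8)
    # A valid header needs the 4-byte magic plus a 4-byte version field.
    if len(header) < 8 or header[:4] != GGUF_MAGIC:
        return None
    # The magic is followed by a little-endian unsigned 32-bit version.
    (version,) = struct.unpack("<I", header[4:8])
    return version
```

Calling `read_gguf_version` on a model file returns an integer for a GGUF file and `None` for anything else, such as a safetensors file.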

I ran LM Studio on a Windows 11 computer with an Intel i7-13700K CPU, 64 GB of RAM, an NVIDIA RTX 3060 GPU with 12 GB of VRAM, and lots of NVMe SSD storage.

Under Windows, there is no integration with WSL; LM Studio only runs as a native Windows application.

Install

I ran the installer on Windows 11. When the program starts it offers a setup wizard, which is just a placeholder and does nothing. Not a good first impression.

I clicked on the small grey Skip button at the top right of the window. Then it offered help on the Search all chats feature. This was the time to offer help in loading a model and configuring the application, not searching for chats that don't exist yet.

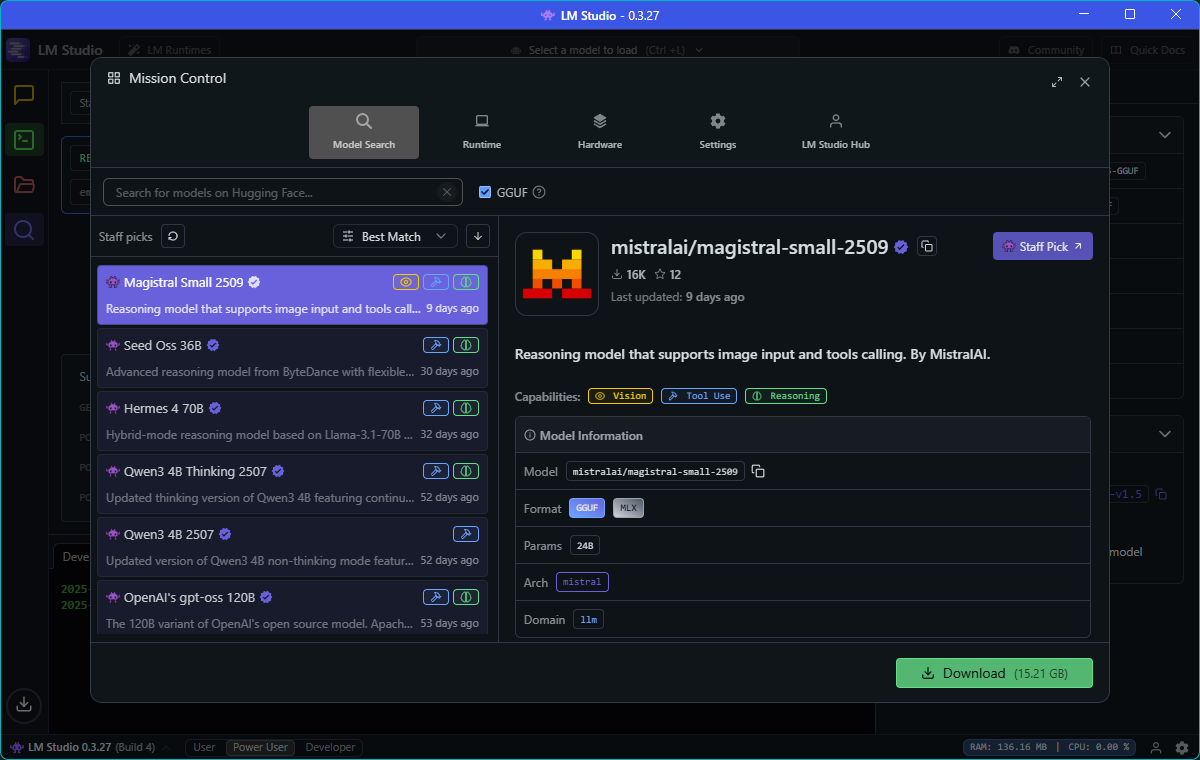

I noticed three buttons at the bottom left of the window: User, Power User, and Developer. The Power User button was selected by default.

The top center of the window has a pulldown menu labeled Select a model to load (CTRL-L). When I clicked on it, it showed a list of GGUF models that I had already downloaded.

When I switched to the Chats screen, it said no LLMs had been downloaded yet.

Hmm.

It offered to download openai / gpt-oss-20b.

That seemed to be much too large for my computer.

Ignoring the Download 12.11 GB button, I tried entering text into the search box, but no models appeared.

No matter what I typed, it said No matching results.

Moving on, I clicked on Mission Control, then the Model Search tab.

It offered a list of models that could be downloaded.

I selected the Magistral Small 2509 (mistralai/magistral-small-2509) model and clicked the Download (15.21 GB) button.

After a few minutes, it finished downloading and I was asked if I wanted to load the model.

After loading, I was able to chat with the model.

First Chat

I asked “is lm studio as good as vs code for working with llms?”

Thought for 53.85 seconds

The choice between LM Studio and VS Code for working with LLMs depends on your specific needs:

- LM Studio is designed specifically for language models. It offers a user-friendly interface tailored for running, fine-tuning, and managing LLMs. It might be more suitable if you're looking for a dedicated tool that simplifies LLM-related tasks without much setup.

- VS Code, on the other hand, is a versatile code editor that can handle many programming languages and development tasks with the right extensions (like Python, Jupyter notebooks, etc.). If your workflow involves coding, managing datasets, or integrating LLMs into larger projects, VS Code could be more flexible, though it might require more configuration.

In summary:

- For specialized LLM work with minimal setup, LM Studio is likely better.

- For a comprehensive development environment that includes coding and other tasks, VS Code is often preferred.

Many users also use both tools together—LM Studio for quick LLM experiments and VS Code for the broader development process.

5.96 tok/sec • 549 tokens • 0.83s to first token • Stop reason: EOS Token Found
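The statistics line can be turned into a rough wall-clock estimate by dividing the token count by the generation speed. This is only a back-of-the-envelope sketch: it assumes the 549 tokens were produced at a steady 5.96 tok/sec, and it does not say how that count relates to the separately reported thinking time. The variable names are mine.

```python
tokens_generated = 549      # "549 tokens"
tokens_per_second = 5.96    # "5.96 tok/sec"
time_to_first_token = 0.83  # seconds, "0.83s to first token"

# Time spent generating, assuming a constant token rate.
generation_seconds = tokens_generated / tokens_per_second
# Add the initial delay before the first token appeared.
total_seconds = time_to_first_token + generation_seconds

print(f"generation: about {generation_seconds:.0f} s")
print(f"including time to first token: about {total_seconds:.0f} s")
```

Under those assumptions the answer took roughly a minute and a half to generate, which matches how slow the response felt on this hardware.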

Model Directory

The default directory for downloaded models is %USERPROFILE%\.lmstudio\models.

To change that, select the models icon on the top left of the window, and navigate to the desired directory.

No provision is made for moving models after they have been downloaded.
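To see what is taking up space in the model directory, for example before deciding where to keep models, a short script can list the GGUF files and their sizes. This is a minimal sketch that assumes the default location mentioned above and that model files end in `.gguf`. The helper name `list_gguf_files` is hypothetical, and nothing here uses an LM Studio API.

```python
from pathlib import Path


def list_gguf_files(models_dir):
    """Return (relative path, size in GB) for each .gguf file under models_dir."""
    root = Path(models_dir)
    if not root.is_dir():
        return []  # nothing downloaded yet, or a different directory is in use
    found = []
    for path in sorted(root.rglob("*.gguf")):
        size_gb = path.stat().st_size / 1e9
        found.append((path.relative_to(root).as_posix(), size_gb))
    return found


if __name__ == "__main__":
    # On Windows, Path.home() resolves to %USERPROFILE%.
    default_dir = Path.home() / ".lmstudio" / "models"
    for name, size_gb in list_gguf_files(default_dir):
        print(f"{size_gb:8.2f} GB  {name}")
```

If the directory has been changed in the application, pass the new location to `list_gguf_files` instead of the default.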